The previous episodes have illustrated that working with the DispatchWorkItem class is a bit more complex than submitting a block of work to a dispatch queue. But you get several benefits in return. Control and flexibility are the most important advantages of the DispatchWorkItem class. In this episode, I'd like to show you a few additional benefits if you choose to work with dispatch work items.

Initialization Options

A DispatchWorkItem instance is nothing more than an object that manages a block of work. Earlier in this series, we initialized a DispatchWorkItem instance in the ImageTableViewCell class. The initializer accepts one argument, a closure or block of work. The dispatch work item holds on to the closure until you submit the dispatch work item to a dispatch queue or execute it directly.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(block: {
    ...
})
```
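To see that initializing a dispatch work item doesn't execute anything, here is a minimal, self-contained sketch that runs as a Swift script or in a playground. The didRun flag is a hypothetical stand-in for the real work, and the wait() call, which is covered later in this episode, only serves to make the result visible.

```swift
import Foundation

// Hypothetical flag that records whether the block of work has run.
var didRun = false

// Initializing the work item stores the closure; it doesn't execute it.
let workItem = DispatchWorkItem {
    didRun = true
}
print("after initialization: \(didRun)")  // false

// Submitting the work item to a dispatch queue schedules the closure.
DispatchQueue.global().async(execute: workItem)

// Block until the work item has finished so the result is visible here.
workItem.wait()
print("after wait(): \(didRun)")  // true
```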

The initializer of the DispatchWorkItem class defines a few optional parameters, which we ignored in the previous episodes. The first optional parameter is the quality of service class of the dispatch work item. We cover quality of service classes in more detail in the next episode. Remember from earlier in this series that a quality of service class communicates the importance of a block of work to Grand Central Dispatch. Grand Central Dispatch takes the quality of service class into account to decide when it's most opportune to execute the block of work.

Open ImageTableViewCell.swift and navigate to the configure(title:url:) method. Create a DispatchWorkItem instance by invoking the initializer and pass .utility as the first argument. This is the quality of service class of the dispatch work item.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(qos: .utility, block: {
    ...
})
```

The initializer also accepts one or more flags that define the behavior of the dispatch work item. A few of these flags define how Grand Central Dispatch should determine the quality of service class of the dispatch work item. Let me give you an example. In some scenarios, it isn't clear at the moment of initialization what the quality of service class of the dispatch work item should be. It then makes more sense to have the dispatch work item inherit the quality of service class of the dispatch queue it is submitted to. You can do that by passing the inheritQoS flag to the initializer of the DispatchWorkItem class.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(flags: [.inheritQoS], block: {
    ...
})
```

In the next episode, you'll learn that Grand Central Dispatch can modify quality of service classes, depending on a number of factors. If you want Grand Central Dispatch to prefer the quality of service class you set for a dispatch work item over the quality of service class of the dispatch queue it is submitted to, then pass the enforceQoS flag to the initializer of the DispatchWorkItem class.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(qos: .userInitiated, flags: [.enforceQoS], block: {
    ...
})
```

Even though the quality of service class and the dispatch work item flags are optional parameters, they allow you to carefully define the behavior of the dispatch work item. You may not need them very often, but they can prove invaluable in some scenarios.
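The initializer options can be combined as in the sketch below. It assumes a hypothetical serial queue, created with the userInitiated quality of service class, and a hypothetical completed array. Which quality of service class Grand Central Dispatch ultimately applies depends on the flags and on the queue, so the sketch only verifies that each variant runs; because the queue is serial, the work items run in the order in which they are submitted.

```swift
import Foundation

// Hypothetical serial queue with its own quality of service class.
let queue = DispatchQueue(label: "com.example.images", qos: .userInitiated)
var completed: [String] = []  // only mutated on the serial queue

// 1. Explicit quality of service class.
let utilityItem = DispatchWorkItem(qos: .utility) {
    completed.append("utility")
}

// 2. Prefer the quality of service class of the queue the item is submitted to.
let inheritingItem = DispatchWorkItem(flags: [.inheritQoS]) {
    completed.append("inheritQoS")
}

// 3. Prefer the work item's own quality of service class.
let enforcingItem = DispatchWorkItem(qos: .userInitiated, flags: [.enforceQoS]) {
    completed.append("enforceQoS")
}

for item in [utilityItem, inheritingItem, enforcingItem] {
    queue.async(execute: item)
}

// The queue is serial, so once the last item has finished, the others have too.
enforcingItem.wait()
print(completed)  // ["utility", "inheritQoS", "enforceQoS"]
```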

Performing a Dispatch Work Item

You already know how to submit a dispatch work item to a dispatch queue for execution. Instead of submitting the dispatch work item to a dispatch queue, you can execute the dispatch work item by invoking the perform() method. While the API looks appealing, be careful, because the result isn't always what you want. Let's undo the changes we made earlier and invoke the perform() method on the dispatch work item instead of submitting it to a dispatch queue.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(block: {
    ...
})

if let workItem = workItem {
    // Perform Dispatch Work Item
    workItem.perform()

    // Update Fetch Data Work Item
    fetchDataWorkItem = workItem
}
```

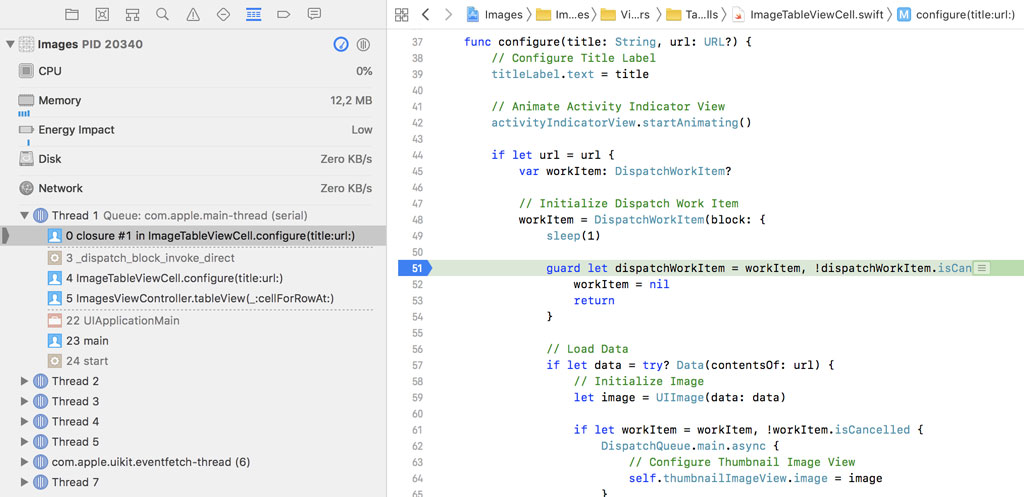

Add a breakpoint to the guard statement in the closure of the dispatch work item. Run the application and open the Debug Navigator on the left.

Does it surprise you that the closure of the dispatch work item is executed on the main thread? The perform() method executes the dispatch work item immediately and synchronously on the calling thread, which is the main thread in this example.
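The following sketch makes the behavior of perform() visible without a debugger. It runs as a Swift script; top-level code in a script runs on the main thread, so it plays the role of the configure(title:url:) method here. The events array is a hypothetical stand-in for the breakpoint.

```swift
import Foundation

var events: [String] = []

let workItem = DispatchWorkItem {
    events.append("work item (main thread: \(Thread.isMainThread))")
}

events.append("before perform()")
// perform() executes the closure synchronously on the calling thread.
workItem.perform()
events.append("after perform()")

print(events)
// ["before perform()", "work item (main thread: true)", "after perform()"]
```

Because perform() is synchronous, a slow block of work, such as loading data from a remote resource, blocks the main thread for as long as it runs.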

Let's submit a block of work to a dispatch queue and execute the dispatch work item in the closure by invoking the perform() method. Run the application and open the Debug Navigator on the left.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(block: {
    ...
})

if let workItem = workItem {
    DispatchQueue.global().async {
        // Perform Dispatch Work Item
        workItem.perform()
    }

    // Update Fetch Data Work Item
    fetchDataWorkItem = workItem
}
```

The closure that is passed to the async(execute:) method is executed on a background or worker thread. This means that the dispatch work item is also executed on that background thread if the perform() method is invoked.
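The same check can be sketched as a script. The ranOnMainThread flag is hypothetical, and the semaphore only exists so the script waits for the background work before it reads the flag.

```swift
import Foundation

// Hypothetical flag; nil means the work item hasn't run yet.
var ranOnMainThread: Bool?
let workItem = DispatchWorkItem {
    ranOnMainThread = Thread.isMainThread
}

let finished = DispatchSemaphore(value: 0)
DispatchQueue.global().async {
    // perform() runs the work item on whichever thread calls it,
    // here a worker thread of the global dispatch queue.
    workItem.perform()
    finished.signal()
}

finished.wait()
print(ranOnMainThread == false)  // true
```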

Being Notified of Completion

One of the downsides of submitting a block of work to a dispatch queue is not knowing when the block of work has finished executing. That's another advantage the DispatchWorkItem class has over submitting a block of work to a dispatch queue. If you want to be notified when a dispatch work item has finished executing, then the notify method is your answer.

Grand Central Dispatch offers two options. When a dispatch work item has finished executing, you can choose to execute (1) a block of work or (2) another dispatch work item. Both variants of the notify method accept a dispatch queue. That is the dispatch queue to which the block of work or the dispatch work item is submitted.

If you choose to pass a block of work to the notify method, then you can optionally specify the quality of service class of the block of work and you can also set one or more dispatch work item flags. The interface is similar to the initializer of the DispatchWorkItem class we explored earlier in this episode.

If you choose to pass a dispatch work item to the notify method, then you only need to specify the dispatch queue to which it needs to be submitted and the dispatch work item. The quality of service class and any dispatch work item flags are encapsulated in the DispatchWorkItem class.
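Both variants can be sketched in a Swift script. The sketch assumes a hypothetical serial queue instead of the main queue, because the script blocks its main thread while it waits, and it uses a dispatch group to wait for both notifications. The order in which the two notifications run isn't something to rely on, which is why the result is sorted.

```swift
import Foundation

// Hypothetical serial queue that receives the completion notifications.
let notificationQueue = DispatchQueue(label: "com.example.notifications")
let group = DispatchGroup()
var notifications: [String] = []  // only mutated on notificationQueue

let workItem = DispatchWorkItem {
    Thread.sleep(forTimeInterval: 0.1)  // stand-in for real work
}

// Option 1: a block of work, optionally with its own QoS and flags.
group.enter()
workItem.notify(qos: .utility, flags: [], queue: notificationQueue) {
    notifications.append("closure")
    group.leave()
}

// Option 2: another dispatch work item, which carries its own QoS and flags.
let followUp = DispatchWorkItem(qos: .utility) {
    notifications.append("work item")
    group.leave()
}
group.enter()
workItem.notify(queue: notificationQueue, execute: followUp)

DispatchQueue.global().async(execute: workItem)
group.wait()
print(notifications.sorted())  // ["closure", "work item"]
```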

Let's keep it simple. We invoke the notify(queue:execute:) method, passing in the main dispatch queue as the first argument and a closure as the second argument. In the closure, we print the URL of the remote resource.

```swift
if let workItem = workItem {
    // Submit Dispatch Work Item to Dispatch Queue
    DispatchQueue.global().async(execute: workItem)

    workItem.notify(queue: .main) {
        print(url)
    }

    // Update Fetch Data Work Item
    fetchDataWorkItem = workItem
}
```

Remove the breakpoint we set earlier, run the application, and inspect the output in the console. Scroll the table view up and down. The closure that is passed to the notify(queue:execute:) method is also invoked for dispatch work items that were cancelled. This can be useful if you need to clean up resources even if the dispatch work item was cancelled before it had a chance to execute.
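Here is a small sketch of that behavior, assuming a hypothetical serial queue and two hypothetical flags. The work item is cancelled before it is submitted, so its closure is skipped, yet the notification still fires once the queue gets to the work item. As far as I can tell from libdispatch's behavior, the notification depends on the work item being submitted; a work item that is cancelled and never submitted never notifies.

```swift
import Foundation

var didRun = false
var notified = false
let done = DispatchSemaphore(value: 0)
let queue = DispatchQueue(label: "com.example.cancel")  // hypothetical label

let workItem = DispatchWorkItem {
    didRun = true
}
workItem.notify(queue: queue) {
    notified = true
    done.signal()
}

// Cancel the work item before it gets a chance to run...
workItem.cancel()
// ...then submit it. The closure is skipped, but the notification still fires.
queue.async(execute: workItem)

done.wait()
print(didRun, notified, workItem.isCancelled)  // false true true
```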

This is interesting to know. Remember from the previous episode that we ran into several memory management issues. We ended up with a workaround that relied on the sleep() function. The notify(queue:execute:) method could be the solution we've been looking for. Let's refactor the implementation of the configure(title:url:) method.

We start by removing the first and the last line of the closure that is passed to the initializer of the DispatchWorkItem class. We no longer use the sleep() function as a workaround, and we no longer set the workItem variable to nil at the end of the closure of the dispatch work item.

```swift
// Initialize Dispatch Work Item
workItem = DispatchWorkItem(block: {
    guard let dispatchWorkItem = workItem, !dispatchWorkItem.isCancelled else {
        workItem = nil
        return
    }

    // Load Data
    if let data = try? Data(contentsOf: url) {
        // Initialize Image
        let image = UIImage(data: data)

        if let workItem = workItem, !workItem.isCancelled {
            DispatchQueue.main.async {
                // Configure Thumbnail Image View
                self.thumbnailImageView.image = image
            }
        }
    }
})
```

The next step is taking advantage of the asyncAfter(deadline:execute:) method to add a delay to the execution of the dispatch work item. We define a delay of half a second.

```swift
if let workItem = workItem {
    // Submit Dispatch Work Item to Dispatch Queue
    DispatchQueue.global().asyncAfter(deadline: .now() + 0.5, execute: workItem)

    // Update Fetch Data Work Item
    fetchDataWorkItem = workItem
}
```

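If we run the application as is, then we end up with a large number of leaking DispatchWorkItem instances. We discovered that in the previous episode. The solution is simple. We invoke the notify(queue:execute:) method and, in the closure that we pass to the method, we set the workItem variable to nil. The closure of the dispatch work item captures the workItem variable, which holds a strong reference to the dispatch work item, which in turn holds on to its closure. Setting the variable to nil breaks that reference cycle, and the dispatch work item can be deallocated.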

```swift
if let workItem = workItem {
    // Submit Dispatch Work Item to Dispatch Queue
    DispatchQueue.global().asyncAfter(deadline: .now() + 0.5, execute: workItem)

    // Update Fetch Data Work Item
    fetchDataWorkItem = workItem
}

// Clean Up
workItem?.notify(queue: .main) {
    workItem = nil
}
```
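The reference cycle and the fix can be reproduced outside the application. In the sketch below, the hypothetical configure() function mimics configure(title:url:), didLoad stands in for loading the image, and a weak reference reveals whether the dispatch work item was deallocated. The clean-up closure is submitted to a global queue rather than the main queue because the script blocks its main thread while it waits.

```swift
import Foundation

// Hypothetical stand-ins for the table view cell's state.
weak var weakWorkItem: DispatchWorkItem?
var didLoad = false
let cleanedUp = DispatchSemaphore(value: 0)

func configure() {
    var workItem: DispatchWorkItem?

    workItem = DispatchWorkItem {
        // The closure captures the workItem variable, which references the
        // work item, which owns the closure: a strong reference cycle.
        guard let item = workItem, !item.isCancelled else { return }
        didLoad = true  // stand-in for loading the image
    }
    weakWorkItem = workItem

    if let workItem = workItem {
        DispatchQueue.global().asyncAfter(deadline: .now() + 0.5, execute: workItem)
    }

    // Clean Up: break the cycle once the work item has finished (or was cancelled).
    workItem?.notify(queue: DispatchQueue.global()) {
        workItem = nil
        cleanedUp.signal()
    }
}

configure()
cleanedUp.wait()
print(didLoad, weakWorkItem == nil)  // true true
```

Removing the `workItem = nil` line from the clean-up closure leaves weakWorkItem non-nil, which is the leak from the previous episode in miniature.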

Run the application one more time, scroll the table view up and down, and wait for the table view to be populated with images. Click the Debug Memory Graph button in the debug bar at the bottom and open the Debug Navigator on the left. It shows a list of the objects that are currently in memory. Use the text field at the bottom to search for any DispatchWorkItem instances that are still in memory. There should be no leaking dispatch work items.

Waiting for Completion

There is one last feature of the DispatchWorkItem class I'd like to discuss. Sometimes you need to block the current thread until a dispatch work item has finished executing, for example, if the application requires a resource before it can continue its execution.

It's possible to block the current thread by invoking the wait() method of the DispatchWorkItem class. This is similar to invoking the sync(execute:) method of the DispatchQueue class. The API is a bit different, though.

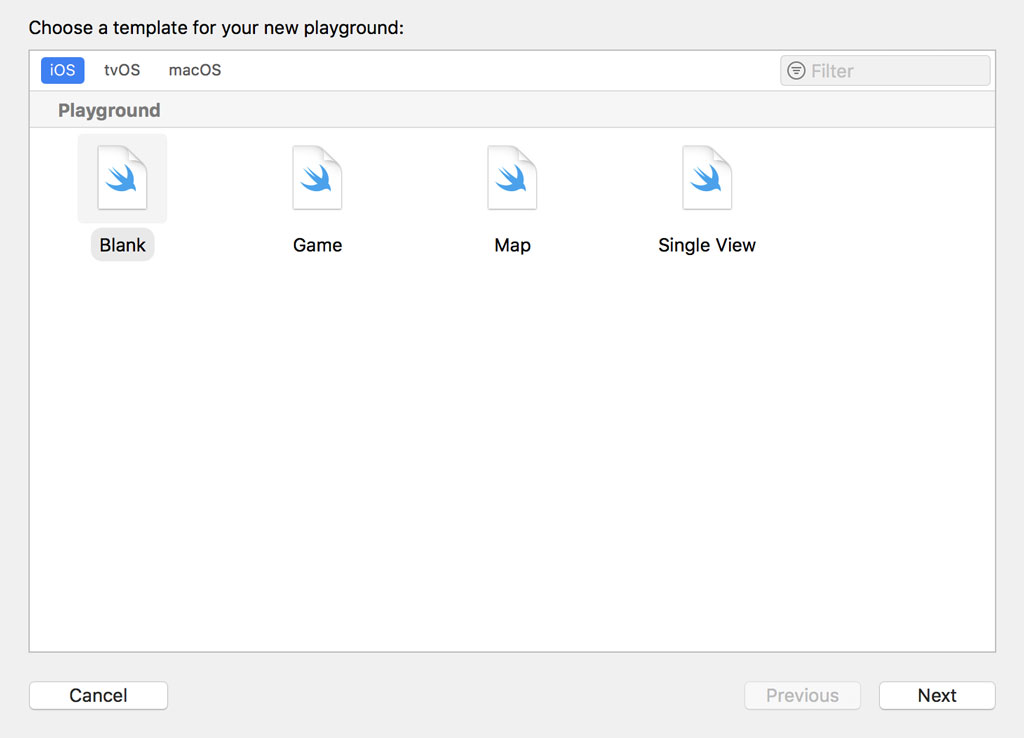
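The following sketch contrasts the two approaches without any network access. The record(_:) helper and its lock are hypothetical additions that keep the shared array safe to mutate from different threads. One practical difference is that async(execute:) followed by wait() lets the current thread do other work between submitting the dispatch work item and blocking.

```swift
import Foundation

// Hypothetical helper: a lock keeps the shared array safe across threads.
let lock = NSLock()
var events: [String] = []
func record(_ event: String) {
    lock.lock()
    events.append(event)
    lock.unlock()
}

// 1. sync(execute:) submits a block of work and blocks until it has finished.
DispatchQueue.global().sync {
    record("sync block")
}
record("after sync")

// 2. async(execute:) followed by wait() has a similar effect with a work item.
let workItem = DispatchWorkItem {
    record("work item")
}
DispatchQueue.global().async(execute: workItem)
// The current thread could do other work here before it blocks.
workItem.wait()
record("after wait()")

print(events)
// ["sync block", "after sync", "work item", "after wait()"]
```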

Let's use a playground to explore the API. Create a new playground by choosing the Blank template from the iOS section.

Remove the contents of the playground and add an import statement for the Foundation framework.

```swift
import Foundation
```

We create a DispatchWorkItem instance and store a reference in a constant, workItem. In the closure that is passed to the initializer, we initialize a Data instance with the contents of a remote resource. We force unwrap the URL and use the try! keyword because we're not concerned with safety in this example. We print the number of bytes of the Data instance to the console.

```swift
import Foundation

let workItem = DispatchWorkItem {
    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}
```

To execute the dispatch work item, we submit it to a global dispatch queue. This should look familiar.

```swift
import Foundation

let workItem = DispatchWorkItem {
    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}

DispatchQueue.global().async(execute: workItem)
```

To understand the flow of execution of the playground, we add a print statement before the initialization of the DispatchWorkItem instance and we add a print statement after submitting the dispatch work item to the global dispatch queue.

```swift
import Foundation

print("before")

let workItem = DispatchWorkItem {
    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}

DispatchQueue.global().async(execute: workItem)

print("after")
```

Execute the playground and inspect the output in the console. The output shouldn't be surprising. Because the dispatch work item is executed asynchronously, the print statements before and after submitting the dispatch work item run before the print statement in the closure of the dispatch work item.

```
before
after
2328941
```

Let's invoke the wait() method before the last print statement. Run the playground and inspect the output in the console.

```swift
import Foundation

print("before")

let workItem = DispatchWorkItem {
    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}

DispatchQueue.global().async(execute: workItem)

workItem.wait()

print("after")
```

Remember what I mentioned earlier. The wait() method is similar to the sync(execute:) method of the DispatchQueue class. The wait() method blocks the execution of the current thread until the dispatch work item has finished executing. That is why the last print statement is executed after the size of the Data instance is printed to the console.

```
before
2328941
after
```

But there's more to the wait() method. Grand Central Dispatch defines three variants. We already know the behavior of the wait() method. If we invoke the wait(timeout:) method or the wait(wallTimeout:) method, we can define a timeout. What does that mean? If the timeout that is passed to the wait method is exceeded, execution of the current thread continues. This is useful if the application is waiting for a resource, but it doesn't want to wait indefinitely.

Give it a try. We invoke the wait(timeout:) method and define a timeout of one second. To understand the behavior of the wait(timeout:) method, we invoke the sleep() function in the closure that is passed to the initializer of the DispatchWorkItem class.

```swift
import Foundation

print("before")

let workItem = DispatchWorkItem {
    sleep(5)

    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}

DispatchQueue.global().async(execute: workItem)

workItem.wait(timeout: .now() + 1.0)

print("after")
```

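The output looks like this once the dispatch work item has finished executing. The after message appears roughly one second after the before message, as soon as the timeout is exceeded, while the number of bytes only appears after the five-second sleep and the download have completed.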

```
before
after
2328941
```

Keep in mind that the wait(timeout:) method returns as soon as the dispatch work item has finished executing; it doesn't necessarily wait until the timeout is exceeded. Remove the sleep() function from the closure of the dispatch work item and set the timeout to ten seconds. Execute the playground one more time.

```swift
import Foundation

print("before")

let workItem = DispatchWorkItem {
    let data = try! Data(contentsOf: URL(string: "https://cdn.cocoacasts.com/7ba5c3e7df669703cd7f0f0d4cefa5e5947126a8/1.jpg")!)

    print(data.count)
}

DispatchQueue.global().async(execute: workItem)

workItem.wait(timeout: .now() + 10.0)

print("after")
```

The output is different because the dispatch work item finishes executing before the timeout is exceeded.

```
before
2328941
after
```
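Neither playground inspects the value that wait(timeout:) returns. It returns a DispatchTimeoutResult, which is either .success or .timedOut, so the calling code can decide how to proceed. The sketch below replaces the download with a hypothetical two-second sleep so that it runs offline. According to the libdispatch documentation, a dispatch work item may be waited on only once, but a wait that times out doesn't count toward that limit, which is why the second call is allowed.

```swift
import Foundation

let slowWorkItem = DispatchWorkItem {
    Thread.sleep(forTimeInterval: 2)  // stand-in for a slow download
}
DispatchQueue.global().async(execute: slowWorkItem)

// The deadline passes before the work item finishes: .timedOut.
let firstResult = slowWorkItem.wait(timeout: .now() + 0.5)
print(firstResult == .timedOut)  // true

// A wait that timed out doesn't count as the one allowed wait, so waiting
// again is fine. This call returns as soon as the work item finishes: .success.
let secondResult = slowWorkItem.wait(timeout: .now() + 5)
print(secondResult == .success)  // true
```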

I already mentioned that Grand Central Dispatch defines two types of timeouts. What is the difference between wait(timeout:) and wait(wallTimeout:)? DispatchTime represents a relative point in time whereas DispatchWallTime represents an absolute point in time. What does that mean?

DispatchWallTime is based on the wall clock. If a point in time is specified using DispatchWallTime, then that point in time is absolute. It doesn't change if the system goes to sleep.

This isn't true for DispatchTime. If we specify a point in time ten minutes from now and the system goes to sleep for one hour after five minutes, then that point in time is also moved into the future by one hour.
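The only difference at the call site is the type of the deadline. The sketch below, with a hypothetical short sleep standing in for real work, builds a DispatchWallTime deadline and passes it to wait(wallTimeout:); the commented-out line shows the equivalent DispatchTime deadline for wait(timeout:). Only one of the two waits is used because a dispatch work item can be waited on successfully only once.

```swift
import Foundation

let workItem = DispatchWorkItem {
    Thread.sleep(forTimeInterval: 0.2)  // stand-in for real work
}
DispatchQueue.global().async(execute: workItem)

// A deadline ten seconds from now, measured on the wall clock.
let wallDeadline: DispatchWallTime = .now() + .seconds(10)

// The DispatchTime equivalent would be:
// workItem.wait(timeout: .now() + .seconds(10))
let result = workItem.wait(wallTimeout: wallDeadline)
print(result == .success)  // true
```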

What's Next?

The DispatchWorkItem class has a powerful API, offering control and flexibility. The API may seem complex, but the possibilities are quite amazing.

In the next episode, we take a close look at a subject that is often overlooked or ignored by developers, quality of service classes. Understanding and correctly applying quality of service classes is essential if your goal is to build a robust application that takes advantage of Grand Central Dispatch.